Kubernetes Pods

How a group of containers becomes a pod, and what the weird pause container that Kubernetes provides is for.

This article assumes you have a general understanding of how Kubernetes works.

Containers

Containers tend to operate alone in silos. If you have set up a container environment by yourself, chances are you have hard-coded IP addresses to get containers to communicate with each other. Sometimes it does make sense to group containers together, but we should still keep each application in its own container so that we can update or roll back our applications separately. Docker provides options for sharing namespaces between containers, so that multiple containers can work together as a unit rather than as separate entities.

Pods

If you have ever SSHed into a node, you may have wondered why so many unfamiliar Docker containers are running, especially the ones called pause.

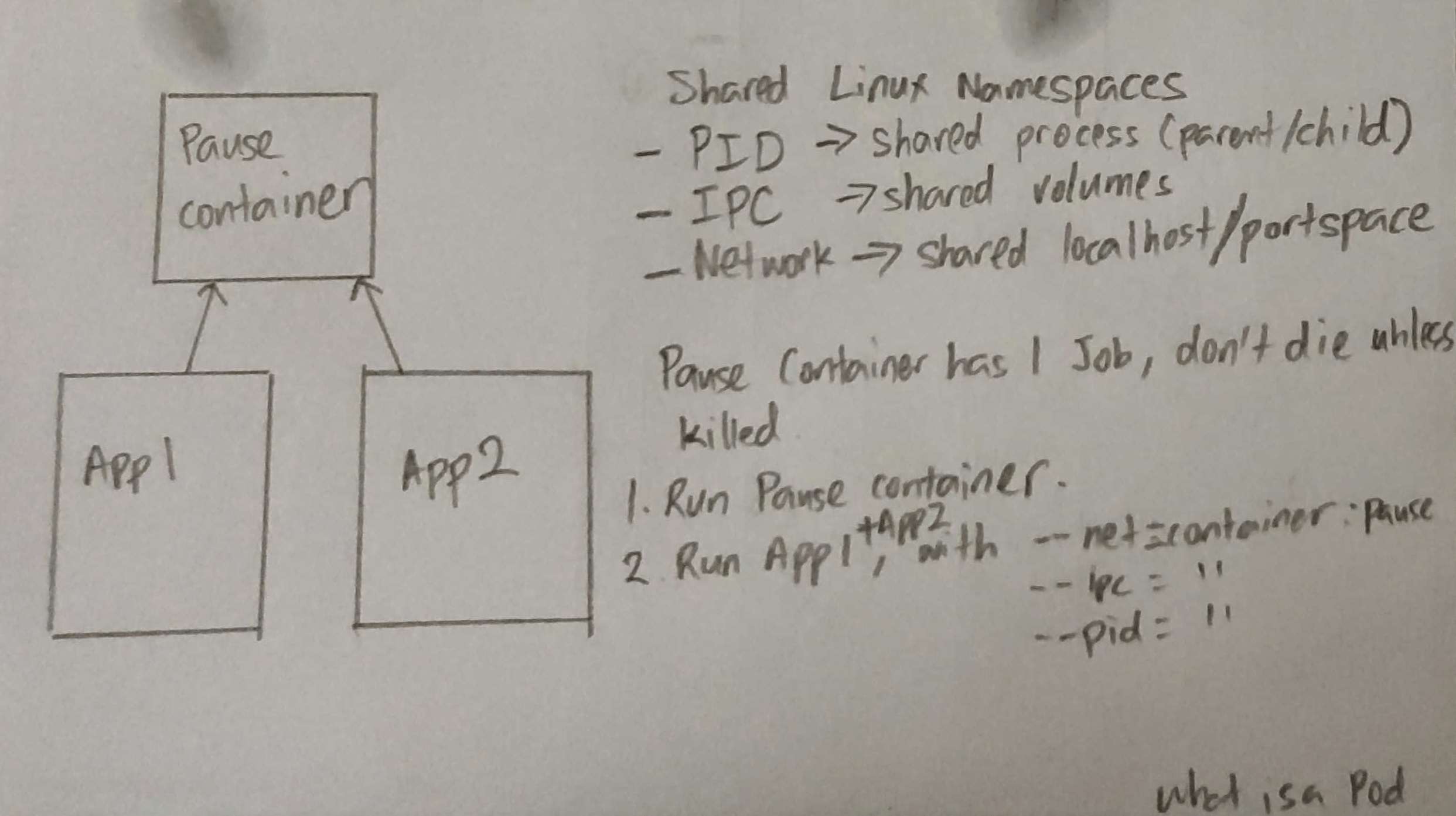

Before I explain what the pause container does, let's see what a pod actually is and requires.

Shared Network

Containers within a pod share the same localhost and port space. That is, a container can call another container in the same pod via localhost:<port number>, and two containers in the same pod cannot both listen on the same port.

Shared Volumes

Containers within a pod can mount the same volumes. Volumes are defined at the pod level, so the containers have access to the same "local" files, which are isolated within the pod. Volumes may be ephemeral or backed by attached storage.

Shared Resources

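Resources are accounted for at the pod level. All containers in the pod sit under one pod-level cgroup, so CPU and memory limits can be enforced on the group as a whole. In Kubernetes you declare requests and limits per container, and the pod's total is their sum.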

Okay, how?

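Linux namespaces (not Kubernetes namespaces) can be shared among containers and processes, and pods achieve all of the above by having their containers share namespaces. More specifically, the containers share the network and IPC namespaces, and optionally the PID namespace (in current Kubernetes, PID sharing is opt-in via shareProcessNamespace). The shared resources part is handled by cgroups rather than namespaces, but cgroups can likewise be applied to a group of processes.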
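Linux exposes the namespaces of every process under /proc/<pid>/ns, so you can look at them yourself. The snippet below is a minimal sketch, assuming a Linux host; list_ns is a hypothetical helper name, not a Docker or Kubernetes command.

```shell
# Sketch (Linux only): print the namespace IDs a process belongs to.
# list_ns is a hypothetical helper; it defaults to the current shell.
list_ns() {
    pid=${1:-$$}
    for ns in net ipc pid; do
        # Each entry is a symlink whose target looks like "net:[4026531992]".
        readlink "/proc/$pid/ns/$ns"
    done
}

list_ns    # namespaces of the current shell
```

Two processes that print the same ID for a given namespace type are in the same namespace. Reading the entries of a process owned by another user usually requires root.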

So let's say we have an application that needs nginx in front of your own binary. To manually create a pod, you could do something like this.

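    # The pause container owns the namespaces, so the port is published here.
    # --ipc=shareable lets other containers join its IPC namespace.
    docker run -d --name pause-app --ipc=shareable -p 8080:80 k8s.gcr.io/pause:3.1

    # nginx joins the pause container's network, IPC, and PID namespaces.
    docker run -d --name nginx -v "$(pwd)/nginx.conf:/etc/nginx/nginx.conf" --net=container:pause-app --ipc=container:pause-app --pid=container:pause-app nginx

    # app-image stands in for your own application image.
    docker run -d --name app --net=container:pause-app --ipc=container:pause-app --pid=container:pause-app app-image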

The nginx.conf mounted into the nginx container looks like this:

    error_log stderr;
    events { worker_connections 1024; }
    http {
        access_log /dev/stdout combined;
        server {
            listen 80 default_server;
            location / {
                # Forward to the app container over the shared localhost.
                proxy_pass http://127.0.0.1:6000;
            }
        }
    }

And these three containers now live in the same pod. Notice that nginx is calling your application via localhost (here assumed to listen on port 6000)? This works because they share the same network namespace.
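One way to check that claim from the host is to compare the namespace links of the two containers' main processes. This is a minimal sketch, assuming a Linux host; same_ns is a hypothetical helper name, and the Docker lines in the comments assume the container names used in this article.

```shell
# Sketch (Linux only): succeed if two processes share a namespace.
# same_ns is a hypothetical helper, not part of Docker or Kubernetes.
# Usage: same_ns <pid-a> <pid-b> <net|ipc|pid>
same_ns() {
    a=$(readlink "/proc/$1/ns/$3") || return 2   # e.g. "net:[4026531992]"
    b=$(readlink "/proc/$2/ns/$3") || return 2
    [ "$a" = "$b" ]                              # same ID means same namespace
}

# Example against the containers in this article (needs Docker, usually root):
#   nginx_pid=$(docker inspect -f '{{.State.Pid}}' nginx)
#   app_pid=$(docker inspect -f '{{.State.Pid}}' app)
#   same_ns "$nginx_pid" "$app_pid" net && echo "shared network namespace"
```

The function returns 0 when the namespaces match, 1 when they differ, and 2 when one of the processes cannot be inspected.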

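So what's this pause container? It's a container that does nothing except stay alive until it is killed (https://github.com/kubernetes/kubernetes/blob/master/build/pause/pause.c). A namespace only exists while some process is holding it, so the pause container keeps the pod's namespaces alive even when your application containers crash or restart. This allows you to connect your namespaces to a dummy container, like duct taping all your containers together to a strong base.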
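To make "does nothing" concrete, here is a rough shell analogue of that behavior. It is only a sketch: the real pause is the small C program linked above, which additionally reaps zombie processes, and pause_forever is a hypothetical name.

```shell
# Rough shell analogue of a pause-style process, not the real implementation.
# pause_forever is a hypothetical name.
pause_forever() {
    sleeper=
    # Exit cleanly when asked to stop, taking the helper sleep with us.
    trap 'kill "$sleeper" 2>/dev/null; exit 0' TERM INT
    while :; do
        sleep 3600 &
        sleeper=$!
        wait "$sleeper"   # returns early if a trapped signal arrives
    done
}

# Usage: run it in the background, then stop it with SIGTERM.
#   pause_forever &
#   kill -TERM $!
```

The process holds no state and serves no requests. Its only job is to exist, which is exactly what makes it a stable anchor for the shared namespaces.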