What are Containers? (Docker)

Recently, there has been a lot of talk about the buzzwords of new-age DevOps: Docker, Kubernetes and all that good jazz. What's in it for me?

What's wrong with operations/development?

People tend to have different environments, and sometimes an application works in one environment but not in another. Why? Could the software's library dependencies differ from one machine to the next? Library conflicts? Maybe. So we need a standard way of shipping applications from the developer's machine to development and production environments.

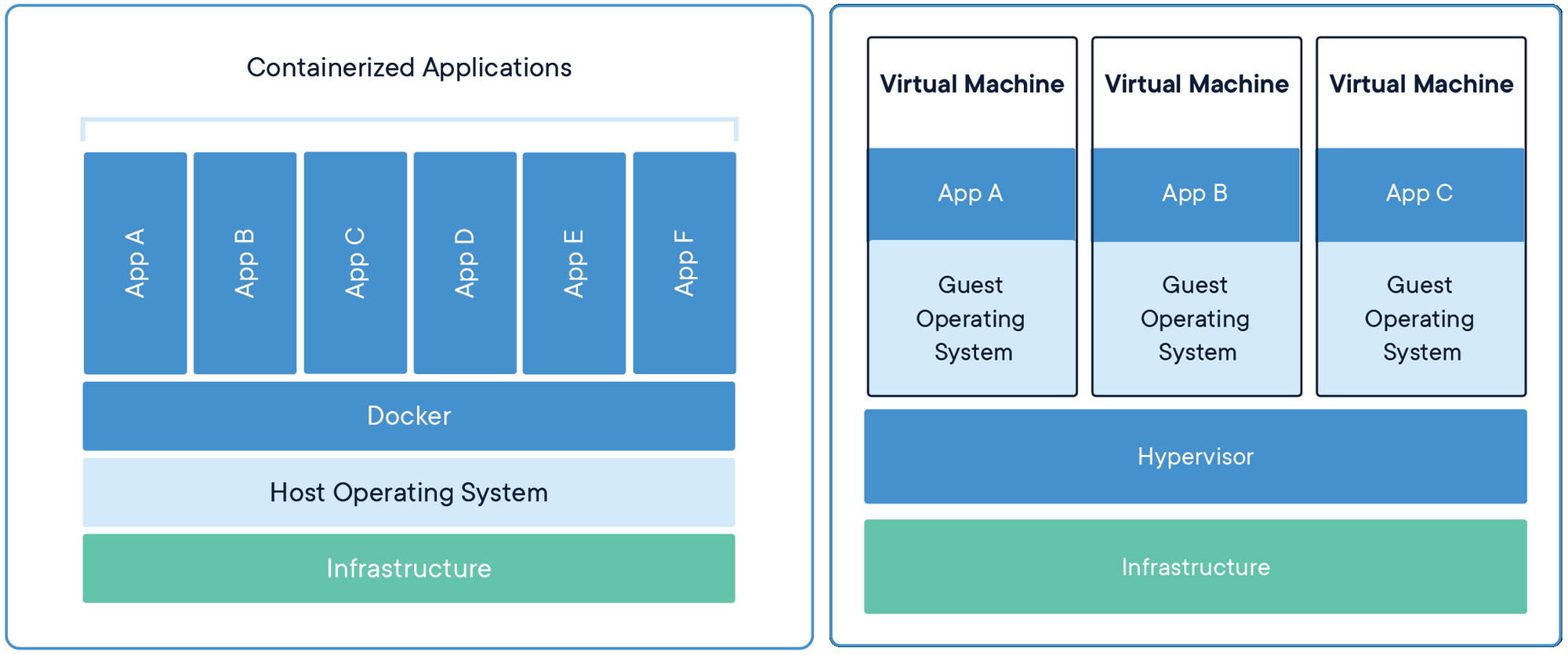

So why can't we just spin up another virtual machine using, say, Vagrant?

We could. But what if we have many applications? It's rather uneconomical to spin up a virtual machine for every single one. Each virtual machine runs a full guest operating system, which is a lot of overhead in memory, CPU and disk just to run a small application.

So how does this "container" help me?

A container is basically your binaries bundled together with the system dependencies your application requires. So if your application runs on Alpine Linux or Ubuntu 16.04 and requires Node 8 or 10, you can lock exactly those versions into the container. Declaring what you need is easy: you write it down in a Dockerfile. So now you only need one virtual machine or host machine, and you get isolation from Docker containers instead of from one expensive virtual machine per application. Containers share the host's kernel rather than each booting a full guest operating system, which is what makes them so much lighter.
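To see that locking in action, you can ask different images for their Node version on the same host. A minimal sketch, assuming Docker is installed; `node_version_in` and `compare_node_versions` are hypothetical helper names, and `node:8-alpine` / `node:10-alpine` are tags of the official node image on Docker Hub.

```bash
# Print the Node.js version baked into an image, ignoring whatever Node the host has.
# --rm removes the throwaway container as soon as the command exits.
node_version_in() {
  local image="$1"
  docker run --rm "$image" node --version
}

# Compare several images side by side (hypothetical helper built on the one above).
compare_node_versions() {
  local image
  for image in "$@"; do
    echo "$image: $(node_version_in "$image")"
  done
}
```

Running `compare_node_versions node:8-alpine node:10-alpine` should print a v8.x line and a v10.x line, with the two runtimes never touching each other or the host.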

What's the catch?

It's still not fully secure. Because containers share the host's kernel, you still need a virtualised/host sandbox so that nothing that escapes a container can reach anything else, and so that rogue users can't access your containers.

Okay, I'm sold. How can I use it?

I run a few containers on my server.

- Ghost blogging platform

- My website

- My beta testing website

- Elasticsearch

- My Path of Exile search binaries.

For Elasticsearch, the Ghost blogging platform or any other off-the-shelf application, just read its Docker guide; it's usually detailed enough to get you up and running.
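As an example of following such a guide, this is roughly what the quick start on the official `ghost` image's Docker Hub page boils down to. A minimal sketch: `run_ghost`, the container name `ghost-blog`, the volume name `ghost-content` and the default host port are hypothetical choices. `2368` is the port Ghost listens on inside the container, and `NODE_ENV=development` is the quick-start setting; check the image's page for a production setup.

```bash
# Start Ghost in the background, publishing its internal port 2368 on the host.
# The names and the default host port are illustrative, not required by the image.
run_ghost() {
  local host_port="${1:-2368}"
  docker run -d \
    --name ghost-blog \
    -e NODE_ENV=development \
    -p "${host_port}:2368" \
    -v ghost-content:/var/lib/ghost/content \
    ghost
}
```

The named volume keeps the blog's content around when the container is removed and recreated, for example when upgrading the image.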

So I will use my Path of Exile search binaries (https://github.com/ashwinath/poe-search-discord) as the example. The application has two components: a frontend built with webpack, and a Node backend server that serves the frontend's static HTML/JS/CSS files. First, I need to script how the frontend is built, which is rather simple:

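```bash
#!/bin/bash
set -euo pipefail  # stop at the first failing command

cd frontend
rm -rf build/      # start from a clean webpack output
yarn               # install the frontend's dependencies
yarn build         # run the frontend build script (webpack)
# Replace any previous copy: if ../build/public already existed,
# cp -r would nest the new output at ../build/public/build
rm -rf ../build/public
mkdir -p ../build
cp -r build ../build/public
cd ..
```

Simple, right? Just go in, build, copy the output to the parent directory and come back out.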
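Since the image later copies `build` wholesale, a missing frontend build could go unnoticed until the server tries to serve it. A small guard can fail fast instead. A minimal sketch, assuming webpack emits an `index.html` into the output folder; `check_build_output` is a hypothetical helper.

```bash
# Fail fast if the frontend build is missing from the folder the image will copy.
# Assumes webpack writes an index.html entry point (adjust for your output).
check_build_output() {
  local dir="${1:-build/public}"
  if [ ! -f "$dir/index.html" ]; then
    echo "missing $dir/index.html: run the frontend build first" >&2
    return 1
  fi
  echo "ok: $dir"
}
```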

Next, I need to build the Docker image from the instructions declared in the Dockerfile:

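```dockerfile
# Official Node image (https://hub.docker.com/_/node/); this tag uses the Debian-based image.
# Comments must sit on their own line: a trailing "# ..." after an instruction
# is passed to that instruction as an argument.
FROM node:10.7

# Declares where your binaries live inside the isolated environment
WORKDIR /usr/src/app

# Copy the package manifests from your working directory into the image
COPY package*.json ./

# Runs inside the container at build time: install dependencies
RUN yarn

# Copy the build output, including the frontend in build/public
COPY build ./build

# Log directory; the host's /var/log/poe-search is mounted here at run time
RUN mkdir -p /var/log/poe-search

# The app listens on port 7000. EXPOSE only documents this;
# the port is actually published to the host by `docker run -p 7000:7000`.
EXPOSE 7000

# Tells the container how to run your application
CMD ["yarn", "run-prod"]
```

So now that the recipe is done, we need to build the image for real.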

```bash
#!/bin/bash
set -euo pipefail  # stop on the first error or unset variable, e.g. a missing DISCORD_TOKEN

# Build the image; any extra arguments are passed through to docker build
docker build -t ashwinath/poe-search-bot "$@" .

# latest is the default tag, so -t above already applied it; this just makes it explicit
docker tag ashwinath/poe-search-bot ashwinath/poe-search-bot:latest

# Push it to Docker Hub. You can alternatively push it to other registries
docker push ashwinath/poe-search-bot:latest

# Then ssh into the server and deploy. For me it's this giant command: pull the latest image,
# stop and remove the old container, start a new one from the new image with some environment
# variables, and finally prune dangling images (which includes the previous build).
ssh -o "StrictHostKeyChecking no" [email protected] -t "\
  docker pull ashwinath/poe-search-bot && \
  docker stop poe-search-bot && docker rm poe-search-bot && \
  docker run --name poe-search-bot \
    -e ES_HOST='10.15.0.5:9200' -e DISCORD_TOKEN=${DISCORD_TOKEN} -e ENV='PROD' \
    -v /var/log/poe-search:/var/log/poe-search -p 7000:7000 \
    -d ashwinath/poe-search-bot && \
  docker image prune -f"
```

So, for me, all of this is done by Travis CI: all I need to do is a git push to GitHub and everything else happens automatically. My repository does it slightly differently, using a Makefile instead of plain shell scripts, but that is a matter of taste.
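One caveat with the one-liner: on the very first deploy there is no `poe-search-bot` container yet, so `docker stop` fails and the `&&` chain never reaches `docker run`. Below is a sketch of the server-side steps as a function that tolerates that case. It is a variant under my own assumptions, not what the repository's Makefile does: `redeploy` is a hypothetical name, and `docker rm -f` (stop and remove in one step) replaces the separate stop/rm.

```bash
# Server-side redeploy, safe to run whether or not the old container exists.
# Usage: redeploy IMAGE NAME [extra docker run options...]
redeploy() {
  [ "$#" -ge 2 ] || { echo "usage: redeploy IMAGE NAME [docker run options...]" >&2; return 2; }
  local image="$1" name="$2"
  shift 2
  docker pull "$image" || return 1
  # -f stops and removes a running container; "|| true" covers the first deploy,
  # when there is nothing to remove yet
  docker rm -f "$name" >/dev/null 2>&1 || true
  docker run --name "$name" -d "$@" "$image" || return 1
  # Remove dangling images, such as the previous build once :latest has moved on
  docker image prune -f
}
```

For this project that would be something like `redeploy ashwinath/poe-search-bot poe-search-bot -p 7000:7000 -e ENV=PROD -v /var/log/poe-search:/var/log/poe-search`, plus the other environment variables.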

Conclusion

The problem I had was juggling Node libraries and versions: Ghost required a different version of Node from my side projects. My virtual machine also didn't have enough RAM to run npm install, so I resorted to having Travis build my binaries and ship them directly. I used to build them separately and SCP them in, managing the different Node versions through /etc/alternatives, which I still consider a valid option today for small-scale setups. Docker is lightweight, but it is still one more layer to run, so consider it when you really need that isolation.
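For comparison, the /etc/alternatives approach looks roughly like this on Debian/Ubuntu. A minimal sketch, assuming two Node runtimes unpacked under `/opt` (the paths, priorities and helper names are hypothetical); `update-alternatives --install LINK NAME PATH PRIORITY` registers a runtime, `--set` picks one, and both need root.

```bash
# Register a Node runtime under the "node" alternative.
# In automatic mode the highest priority wins.
register_node() {
  local node_path="$1" priority="$2"
  update-alternatives --install /usr/bin/node node "$node_path" "$priority"
}

# Point /usr/bin/node at one specific registered runtime (switches to manual mode).
use_node() {
  update-alternatives --set node "$1"
}

# Example (hypothetical paths, run as root):
#   register_node /opt/node-v8/bin/node 80
#   register_node /opt/node-v10/bin/node 100
#   use_node /opt/node-v8/bin/node
```

The catch, compared with containers, is that `/usr/bin/node` is global: every app on the machine sees whichever runtime is currently selected, so apps needing different versions have to call the versioned paths directly.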